Overview

Introduction to STADLE

STADLE is our paradigm-shifting, intelligence-centric platform for federated, collaborative, and continuous learning. STADLE stands for Scalable Traceable Adaptive Distributed Learning platform.

In particular, federated learning (FL), the core capability of STADLE, continuously collects machine learning (ML) models from distributed devices or clients, aggregates their collective intelligence, and brings it back to the local devices. As a result, FL with STADLE addresses several fundamental problems that commonly appear in ML systems, such as:

Privacy: FL does not require users to upload raw data to cloud servers, so it improves the privacy of AI systems by keeping personal or sensitive data out of the cloud.

Learning Efficiency: Training on huge amounts of data in a centralized manner can be very inefficient. With a distributed learning framework like federated learning, you can efficiently use distributed resources to make learning faster and less expensive.

Real-Time Intelligence: Delivering the desired intelligence often takes a long time, and sometimes all of its value is gone by the time it arrives. FL usually does not require a huge data pipeline, large computational resources, or massive storage to deliver high-quality intelligence.

Communication Load: FL generates dramatically less traffic than classical AI systems because it exchanges ML models instead of raw data.

Low Latency: The communication delay in obtaining collective intelligence can be dramatically reduced by deploying decentralized FL servers on edge servers.
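A back-of-the-envelope calculation helps make the communication-load point concrete. The sketch below compares the size of one model upload with the size of the raw data a centralized system would collect instead. The parameter count, dataset size, and image dimensions are hypothetical placeholders chosen for illustration, not measurements from STADLE.

```python
# Hypothetical sizes for illustration only; real models and datasets vary widely.
BYTES_PER_FLOAT32 = 4


def model_payload_bytes(num_parameters: int) -> int:
    """Bytes needed to send a model whose parameters are stored as float32."""
    return num_parameters * BYTES_PER_FLOAT32


def raw_data_bytes(num_samples: int, bytes_per_sample: int) -> int:
    """Bytes needed to upload the raw training samples themselves."""
    return num_samples * bytes_per_sample


# Assumed example: a 1M-parameter model vs. 10,000 RGB images of 224x224 pixels
# stored with 1 byte per channel.
model_bytes = model_payload_bytes(1_000_000)
data_bytes = raw_data_bytes(10_000, 224 * 224 * 3)

print(f"model upload:    {model_bytes / 1e6:.1f} MB")
print(f"raw data upload: {data_bytes / 1e6:.1f} MB")
print(f"ratio:           {data_bytes / model_bytes:.0f}x")
```

Keep in mind that in FL the model is exchanged in every round, so the actual saving depends on the model size, the number of rounds, and how much new data each device accumulates between rounds.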

Our STADLE platform is horizontally designed and extends the capabilities of FL in the following ways:

Scalability: Decentralized FL servers in STADLE balance the load to accommodate more users and create semi-global models without requiring a central master aggregator node.

Traceability: Our platform provides performance tracking that monitors and manages how collective intelligence models evolve across the decentralized system.

Usability: Our SaaS platform (stadle.ai) provides a GUI that lets users keep track of the distributed learning process and intuitively manage and control aggregators and agents.

Resiliency: In federated learning, systems often fail or become disconnected from each other. STADLE ensures continuous learning, which is critical for many applications operating in real-world settings.

Adaptability: Static intelligence produced by big-data systems quickly becomes outdated and underperforms, whereas adaptive intelligence comes from a well-designed, resilient, distributed learning framework that keeps the local and global models in sync.

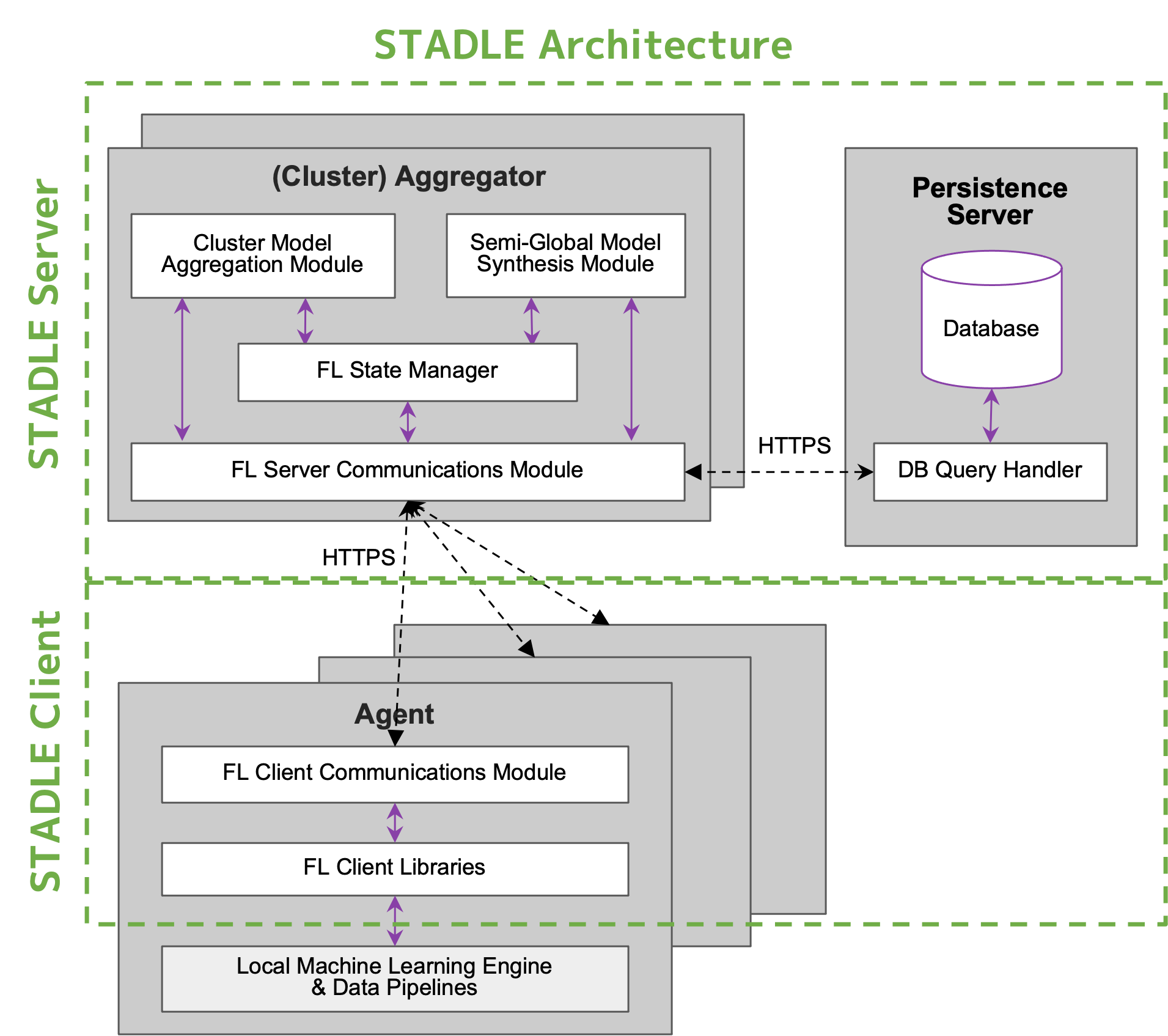

STADLE Architecture

There are 3 main components in STADLE.

Persistence Server

A core component that keeps track of various database entries and ML models.

Packaged as a command inside the stadle library; run it in a terminal as `stadle persistence-server [args]`.

(Cluster) Aggregator

The core FL server, which accepts ML models from distributed agents and runs the aggregation process.

Packaged as a command inside the stadle library; run it in a terminal as `stadle aggregator [args]`.

(Distributed) Agent

Core functionality and libraries that help integrate the local ML engine and/or models into the STADLE platform.

Responsible for communicating with the STADLE core functions.

Packaged inside the stadle-client library as classes that can be imported into the local ML code with `from stadle import BasicClient` or `from stadle import IntegratedClient`.

The `BasicClient` and `IntegratedClient` classes are used to integrate the training, testing, and validation functions of the local ML process.

All these components communicate over HTTPS and exchange ML models with each other.

Initial Base Model Upload Process

The first step in running a federated learning process is to register the initial ML model, which we call the base model. The architecture of the base model is used by all aggregators and agents throughout the entire FL process. We call the agent that uploads the initial base model the admin agent. The base model information can include the ML model itself, its model type (such as PyTorch), the time it was generated, its initial performance data, and so on. The base model can also serve as the very first semi-global model (SG model) to be downloaded by the other agents. This process happens only once, unless you want to start a new federated learning process from the beginning.
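The base model information listed above could be represented as a simple record. This is a minimal sketch, assuming hypothetical names (`BaseModelInfo`, `model_type`, `generated_at`, `initial_performance`, `initial_sg_model`); it is not STADLE's actual schema or upload API. The helper only illustrates that the base model can double as the first SG model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass
class BaseModelInfo:
    """Hypothetical record of what the admin agent registers as the base model.

    Field names are illustrative, not STADLE's actual schema.
    """
    model: Any        # the ML model object or its serialized weights
    model_type: str   # e.g. "PyTorch"
    generated_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc))
    initial_performance: Dict[str, float] = field(default_factory=dict)


def initial_sg_model(base: BaseModelInfo) -> Any:
    """The base model can serve as the very first semi-global (SG) model."""
    return base.model


# Placeholder weights and metric values, for illustration only.
base = BaseModelInfo(
    model={"w": [0.0, 0.0], "b": [0.0]},
    model_type="PyTorch",
    initial_performance={"accuracy": 0.1},
)
```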

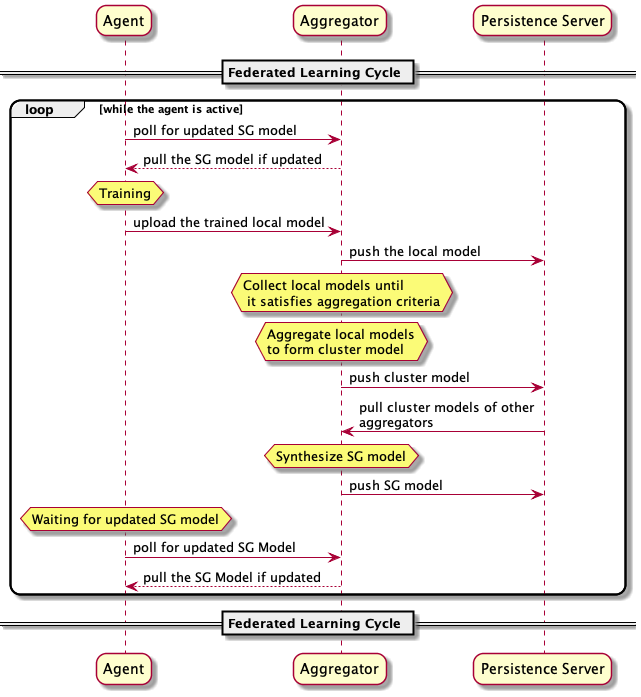

Federated Learning Cycle with STADLE

The figure below shows the federated learning cycle, that is, how federated learning is continuously conducted between an aggregator and an agent. It depicts only a single agent, but in real operation many agents are dispersed across distributed devices.

Agents other than the admin agent request the global model, i.e., the updated federated ML model, in order to train it locally with their own data or deploy it in their own applications.

Once the agent gets the updated model from the aggregator and deploys it, the agent proceeds to “training” to retrain the ML model locally with new data obtained afterwards. Again, this local data is never shared with the aggregator and stays within the distributed devices.

After training the local ML model (which has the same architecture as the global/base model of the federated learning process), the agent calls the FL client API to send the model to the aggregator.

The aggregator receives the model and stores it in the database. The aggregator keeps track of the number of collected ML models and keeps accepting local ML models as long as the federation round is open. The round can be closed based on any defined criterion, such as the aggregator having received enough ML models to federate. When the criterion is met, the aggregator aggregates the local ML models and produces an updated cluster global model.
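The round logic described above can be sketched as follows. This is a minimal, hypothetical aggregator, not STADLE's implementation: models are plain dictionaries of parameter lists, an in-memory list stands in for the database, the round closes once `min_models` local models have arrived, and federated averaging (FedAvg, which weights each model by its number of training samples) is swapped in as the aggregation rule because the text does not specify one.

```python
from typing import Dict, List, Tuple

Model = Dict[str, List[float]]  # parameter name -> flattened values (illustrative)


class ClusterAggregatorSketch:
    """Hypothetical aggregator: collects local models while a round is open,
    reports when `min_models` have arrived, then averages them (FedAvg)."""

    def __init__(self, base_model: Model, min_models: int) -> None:
        self.base_model = base_model
        self.min_models = min_models
        # (model, num_samples) pairs; stands in for the database.
        self.collected: List[Tuple[Model, int]] = []

    def _same_architecture(self, model: Model) -> bool:
        # Local models must match the base model's parameter names and sizes.
        return model.keys() == self.base_model.keys() and all(
            len(model[k]) == len(self.base_model[k]) for k in model)

    def receive(self, model: Model, num_samples: int) -> bool:
        """Store a local model; return True once the round-closing criterion is met."""
        if not self._same_architecture(model):
            raise ValueError("local model does not match the base model architecture")
        self.collected.append((model, num_samples))
        return len(self.collected) >= self.min_models

    def aggregate(self) -> Model:
        """Close the round: sample-weighted average of all collected models."""
        if not self.collected:
            raise ValueError("no local models collected in this round")
        total = sum(n for _, n in self.collected)
        cluster_model = {
            name: [sum(m[name][i] * n for m, n in self.collected) / total
                   for i in range(len(values))]
            for name, values in self.base_model.items()
        }
        self.collected.clear()  # the next round starts empty
        return cluster_model
```

Weighting by sample count lets devices with more local data contribute proportionally more; a plain unweighted mean is the special case in which every `num_samples` is equal.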

The aggregator then collects the cluster models formed by other aggregators to synthesize a semi-global model (SG model), and it is this SG model that is sent back to the agents. If there is only one aggregator, the SG model is identical to the cluster model formed by that aggregator.
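Synthesizing the SG model from several cluster models can be illustrated with another weighted average. This is a sketch under assumptions: the weight attached to each cluster model (here, the number of local models behind it) and the averaging rule itself are illustrative choices, not STADLE's documented synthesis method. The single-aggregator case returns a copy of the cluster model, matching the behavior described above.

```python
from typing import Dict, List, Tuple

Model = Dict[str, List[float]]  # parameter name -> flattened values (illustrative)


def synthesize_sg_model(cluster_models: List[Tuple[Model, int]]) -> Model:
    """Combine cluster models from several aggregators into a semi-global (SG) model.

    Each entry is (cluster_model, weight); the weight is assumed to be the number
    of local models that produced that cluster model.
    """
    if not cluster_models:
        raise ValueError("need at least one cluster model")
    if len(cluster_models) == 1:
        # Only one aggregator: the SG model is simply its cluster model.
        return {name: list(values) for name, values in cluster_models[0][0].items()}
    total = sum(w for _, w in cluster_models)
    first = cluster_models[0][0]
    return {
        name: [sum(m[name][i] * w for m, w in cluster_models) / total
               for i in range(len(values))]
        for name, values in first.items()
    }
```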

Throughout this process, agents keep polling the aggregator to check whether the SG model is ready. Once it is, the updated SG model is sent back to the agent.
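The agent-side polling can be sketched as a simple loop. The `fetch` callable, `interval_s`, and `max_attempts` are hypothetical stand-ins for whatever request and schedule a real agent uses; they are not part of the stadle-client API.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def poll_for_sg_model(fetch: Callable[[], Optional[T]],
                      interval_s: float,
                      max_attempts: Optional[int] = None) -> Optional[T]:
    """Poll the aggregator until an updated SG model is ready.

    `fetch` is a hypothetical callable that returns the SG model when it is
    ready and None otherwise. With max_attempts=None the loop polls forever;
    otherwise it returns None after that many unsuccessful attempts.
    """
    attempts = 0
    while True:
        model = fetch()
        if model is not None:
            return model
        attempts += 1
        if max_attempts is not None and attempts >= max_attempts:
            return None
        time.sleep(interval_s)  # wait before asking the aggregator again
```

A fixed interval keeps the sketch simple; a real deployment might choose the interval (seconds to days) based on how often new SG models are expected.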

After receiving the updated SG model, the agent deploys it, retrains it when ready, and repeats this process until the termination criteria for federated learning are met. In many cases, there is no termination condition at all, and the federated learning and retraining process continues indefinitely.

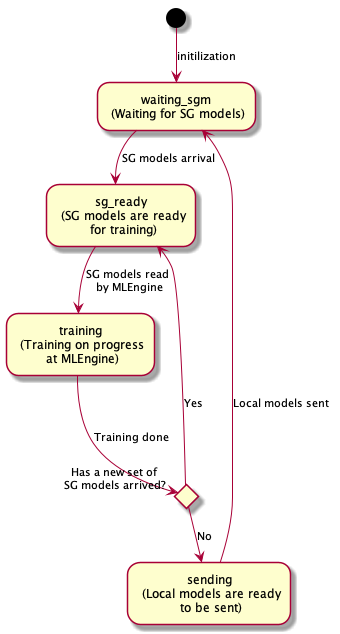

Client-Side Local Training Cycle

It may help to understand the FL client states when integrating STADLE into your ML applications. The figure below shows the state transitions of an agent during local ML training.

While an agent is waiting for the SG model (waiting_sgm state), it queries the aggregator for updates to the global model (that is, the ML model exchanged between the aggregator and the agent). This is done by polling for the updated global model at a regular interval, which can range from seconds to days.

If the SG model is available (sg_ready state), the agent downloads the synthesized SG model that the aggregator has updated. The parameters of the SG model can be merged with the local ML model that is about to be trained. Before feeding the downloaded SG model into its ML model, the agent can calculate an output and store the new input and the feedback from the process.
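Merging the downloaded SG parameters into the local model can take several forms. The sketch below assumes a simple per-parameter linear blend controlled by a hypothetical `alpha` parameter, where `alpha = 1.0` fully replaces the local weights with the SG model. The text only states that the parameters can be merged, so treat this rule as an illustration rather than STADLE's behavior.

```python
from typing import Dict, List

Model = Dict[str, List[float]]  # parameter name -> flattened values (illustrative)


def merge_sg_into_local(local: Model, sg: Model, alpha: float = 1.0) -> Model:
    """Blend downloaded SG parameters into the local model before training.

    new = alpha * sg + (1 - alpha) * local, element by element.
    alpha = 1.0 simply adopts the SG model; smaller values keep part of the
    local weights. The blending rule is an assumption for illustration.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if local.keys() != sg.keys():
        raise ValueError("SG model and local model have different parameters")
    return {
        name: [alpha * s + (1.0 - alpha) * loc for s, loc in zip(sg[name], local[name])]
        for name in local
    }
```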

The agent can then proceed with the local (re)training process (training state). If the agent has received a newer SG model by the time training is done, it discards the retrained model and uses the new SG model for retraining instead. In this case, the agent goes back to the sg_ready state.

Updates made to the ML model are cached so they can be sent to the aggregator when local training is done. The agent then sends its updated local ML model to an aggregator, setting its state to sending.
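The local training cycle above can be summarized as a small state machine. This sketch reuses the state names from the text (waiting_sgm, sg_ready, training, sending); the boolean event flags are hypothetical, and the transition from sending back to waiting_sgm is an assumption based on the agent repeating the cycle after each upload.

```python
from enum import Enum


class AgentState(Enum):
    WAITING_SGM = "waiting_sgm"
    SG_READY = "sg_ready"
    TRAINING = "training"
    SENDING = "sending"


def next_state(state: AgentState, sg_available: bool = False,
               training_done: bool = False, newer_sg_arrived: bool = False,
               sent: bool = False) -> AgentState:
    """Hypothetical transition function for the agent's local training cycle."""
    if state is AgentState.WAITING_SGM:
        # Keep polling until the aggregator reports an SG model.
        return AgentState.SG_READY if sg_available else AgentState.WAITING_SGM
    if state is AgentState.SG_READY:
        # SG model downloaded (and optionally merged): start local (re)training.
        return AgentState.TRAINING
    if state is AgentState.TRAINING:
        if not training_done:
            return AgentState.TRAINING
        # A newer SG model makes the retrained model stale: discard it and restart.
        return AgentState.SG_READY if newer_sg_arrived else AgentState.SENDING
    if state is AgentState.SENDING:
        # After the cached update is uploaded, wait for the next SG model.
        return AgentState.WAITING_SGM if sent else AgentState.SENDING
    raise ValueError(f"unknown state: {state}")
```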

Ready to get started? Great! Continue to the Quickstart.